Important Announcements

Welcome

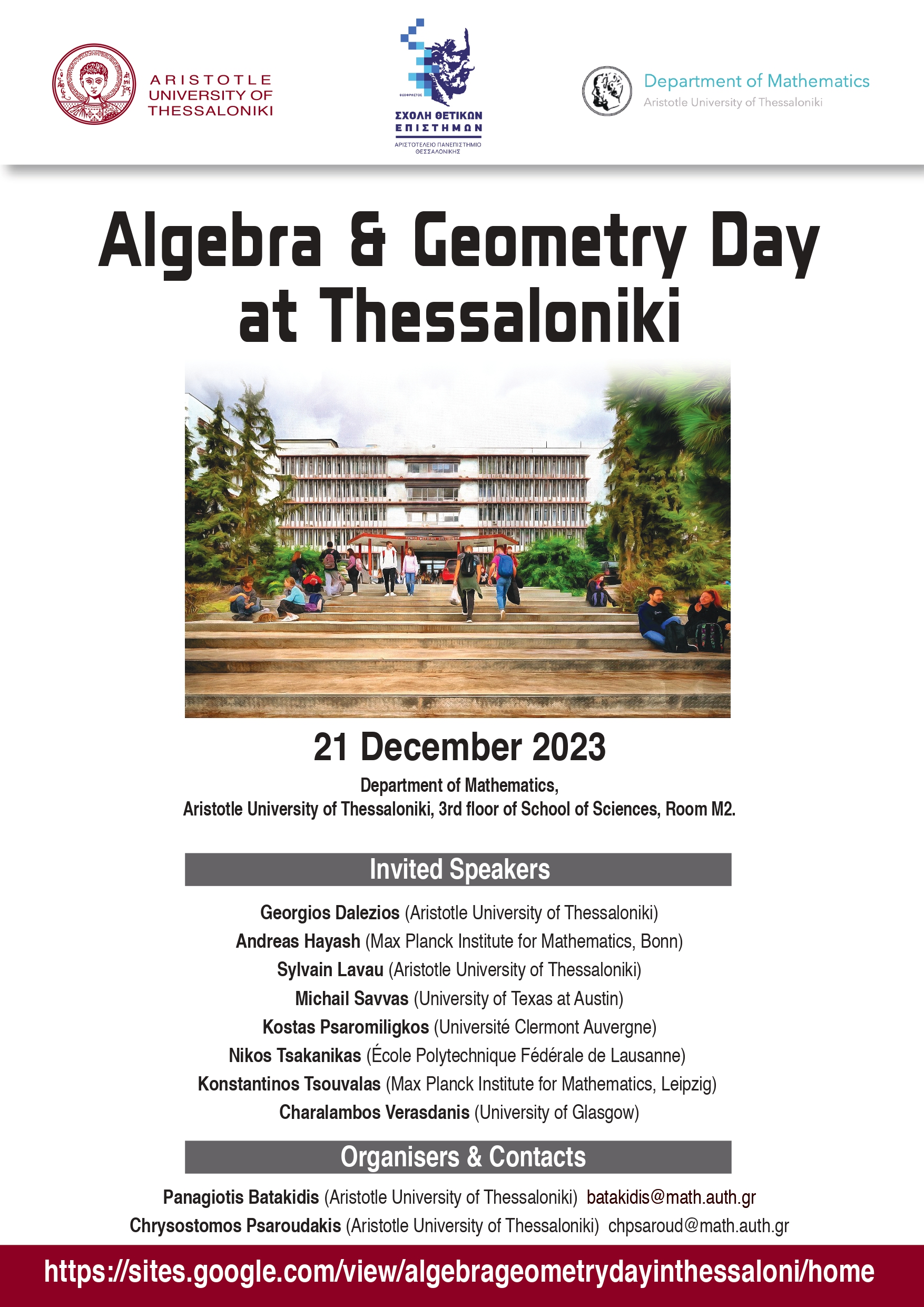

Welcome to the website of the Department of Mathematics of the Aristotle University of Thessaloniki. The Department of Mathematics is one of the oldest departments of AUTh and of its School of Sciences, which was originally known as the School of Physics and Mathematics (Φυσικομαθηματική). The Department first admitted students in November 1928. The founding law of the University of Thessaloniki (Law 3341/14-6-25) was passed on 5 June 1925.